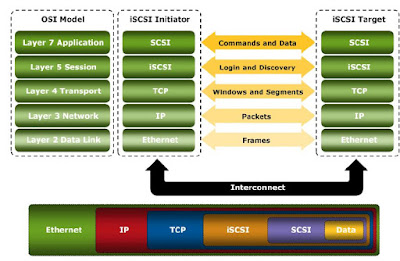

SCSI is the command protocol that works at the application layer of the Open Systems Interconnection (OSI) model. The initiators and the targets use SCSI commands and responses to talk to each other. The SCSI commands, data, and status messages are encapsulated into TCP/IP and transmitted across the network between the initiators and the targets.

iSCSI Architecture

The figure below shows a model of the iSCSI protocol layers and the order in which SCSI commands are encapsulated for delivery over a physical carrier.

iSCSI is the session-layer protocol that initiates a reliable session between devices that recognize SCSI commands and TCP/IP. The iSCSI session-layer interface is responsible for handling login, authentication, target discovery, and session management.

TCP is used with iSCSI at the transport layer to provide reliable transmission. TCP controls message flow, windowing, error recovery, and retransmission. It relies upon the network layer of the OSI model to provide global addressing and connectivity. The OSI Layer 2 protocols at the data link layer of this model enable node-to-node communication through a physical network.
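The encapsulation order described above can be sketched in a few lines of Python. This is only an illustration of the nesting, assuming one labeled header per layer; the `LAYERS` list and the `encapsulate` function are hypothetical names, and no real header formats are modeled.

```python
# Layers that wrap a SCSI payload, listed from innermost to outermost,
# following the order given in the text (session, transport, network, data link).
LAYERS = ["iSCSI", "TCP", "IP", "Data link"]


def encapsulate(payload: str) -> str:
    """Wrap the payload with one labeled header per layer, innermost first."""
    frame = payload
    for layer in LAYERS:
        # Each lower layer prepends its own header to what it received.
        frame = f"[{layer} header]{frame}"
    return frame


print(encapsulate("<SCSI command>"))
```

Reading the printed string from left to right gives the order in which a receiver would strip the headers: data link first, then IP, TCP, and iSCSI, leaving the SCSI command.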

iSCSI Addressing

Both the initiators and the targets in an iSCSI environment have iSCSI addresses that facilitate communication between them. An iSCSI address consists of two parts: the location of the iSCSI initiator or target on the network, and the iSCSI name. The location is a combination of the host name or IP address and the TCP port number.
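As a rough sketch, the two parts of an iSCSI address can be modeled as a small record. The `IscsiAddress` class and its field names are hypothetical and chosen only for illustration; the port value 3260 used in the example is the IANA-registered iSCSI port, and IPv6 literals are not handled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class IscsiAddress:
    """Hypothetical model of an iSCSI address: a location plus an iSCSI name."""

    host: str                   # host name or IP address
    name: str                   # iSCSI name, for example an IQN
    port: Optional[int] = None  # TCP port; left as None when it is omitted

    def location(self) -> str:
        """Return host alone, or host:port when a port is present."""
        if self.port is None:
            return self.host
        return f"{self.host}:{self.port}"


target = IscsiAddress("192.0.2.10", "iqn.2015-04.com.example:disk1", 3260)
initiator = IscsiAddress("host1.example.com", "iqn.2015-04.com.example:host1")

print(target.location(), target.name)
print(initiator.location(), initiator.name)
```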

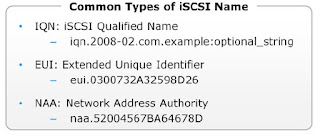

For iSCSI initiators, the TCP port number is omitted from the address. The iSCSI name is a worldwide unique identifier for the initiators and targets within an iSCSI network. The identifier can be a combination of the names of the department, application, manufacturer, serial number, asset number, or any tag that can be used to recognize and manage the iSCSI nodes. Three types of iSCSI names are commonly used:

iSCSI Qualified Name (IQN): An organization must own a registered domain name to generate iSCSI Qualified Names. This domain name does not need to be active or resolve to an address. It just needs to be reserved to prevent other organizations from using the same domain name to generate iSCSI names. A date is included in the name to avoid potential conflicts caused by the transfer of domain names. An example of an IQN is iqn.2015-04.com.example:optional_string. The optional_string provides a serial number, an asset number, or any other device identifier. IQNs enable storage administrators to assign meaningful names to the iSCSI initiators and the iSCSI targets, and therefore to manage those devices more easily.
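The structure of the example IQN can be made concrete with a small parser. This is a minimal sketch, assuming the layout iqn.yyyy-mm.reversed-domain followed by an optional colon and string; the `IQN_PATTERN` expression and the `parse_iqn` function are hypothetical, accept only lowercase domain labels, and are looser than a full standards-compliant check.

```python
import re
from typing import Optional

# Assumed layout: "iqn." + year-month + "." + reversed domain + optional ":string".
IQN_PATTERN = re.compile(
    r"iqn\.(\d{4})-(0[1-9]|1[0-2])\.([a-z0-9][a-z0-9.-]*)(?::(\S+))?"
)


def parse_iqn(name: str) -> Optional[dict]:
    """Split an IQN into its parts, or return None if it does not match."""
    match = IQN_PATTERN.fullmatch(name)
    if match is None:
        return None
    year, month, authority, suffix = match.groups()
    return {
        "year": int(year),
        "month": int(month),
        "authority": authority,  # reversed domain name, e.g. "com.example"
        "suffix": suffix,        # optional device identifier, None if absent
    }


print(parse_iqn("iqn.2015-04.com.example:optional_string"))
print(parse_iqn("iqn.2015-13.com.example"))  # month 13 is rejected
```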

Extended Unique Identifier (EUI): An EUI is a globally unique identifier based on the IEEE EUI-64 naming standard. An EUI is composed of the eui prefix followed by a 16-character hexadecimal name, such as eui.0300732A32598D26.

Network Address Authority (NAA): NAA is another worldwide unique naming format. It is defined by the InterNational Committee for Information Technology Standards (INCITS) T11 Fibre Channel (FC) protocols and is also used by Serial Attached SCSI (SAS). This format enables SCSI storage devices that contain both iSCSI ports and SAS ports to use the same NAA-based SCSI device name. An NAA name is composed of the naa prefix followed by a hexadecimal name, such as naa.52004567BA64678D. The hexadecimal representation has a maximum size of 32 characters (a 128-bit identifier).
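The three name formats can be told apart by their prefixes and lengths. The sketch below is illustrative only: `name_type` is a hypothetical helper, the IQN check looks at the prefix alone, and the NAA pattern assumes the hexadecimal part is either 16 or 32 characters long (a 64-bit or 128-bit identifier), which is an assumption that goes beyond the 32-character maximum stated above.

```python
import re
from typing import Optional

# "eui." followed by exactly 16 hexadecimal characters.
EUI_PATTERN = re.compile(r"eui\.[0-9A-Fa-f]{16}")
# "naa." followed by 16 or 32 hexadecimal characters (assumed lengths).
NAA_PATTERN = re.compile(r"naa\.(?:[0-9A-Fa-f]{16}|[0-9A-Fa-f]{32})")


def name_type(name: str) -> Optional[str]:
    """Return "IQN", "EUI", or "NAA" for a recognized name, else None."""
    if name.startswith("iqn."):
        return "IQN"  # prefix check only; the rest of the name is not validated
    if EUI_PATTERN.fullmatch(name):
        return "EUI"
    if NAA_PATTERN.fullmatch(name):
        return "NAA"
    return None


for example in (
    "iqn.2015-04.com.example:optional_string",
    "eui.0300732A32598D26",
    "naa.52004567BA64678D",
):
    print(example, "->", name_type(example))
```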

iSCSI Discovery

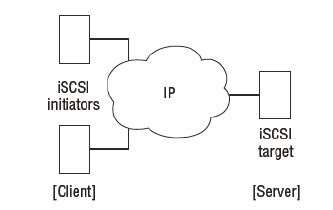

An iSCSI initiator must discover the location of its targets on the network and the names of the targets available to it before it can establish a session. This discovery commonly takes place in one of two ways: through SendTargets discovery or through the Internet Storage Name Service (iSNS).

iSNS: iSNS in the iSCSI SAN is equivalent in function to the Name Server in an FC SAN. It enables automatic discovery of iSCSI devices on an IP-based network. The initiators and targets can be configured to automatically register themselves with the iSNS server. Whenever an initiator wants to know the targets that it can access, it can query the iSNS server for a list of available targets.
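The register-then-query flow described for iSNS can be illustrated with a toy in-memory registry. This is not the iSNS protocol or any real client library: `ToyIsnsServer`, its methods, and the per-target list of allowed initiators are all hypothetical, and are used only to show a target registering itself and an initiator asking which targets it can access.

```python
from typing import Dict, List, Set, Tuple


class ToyIsnsServer:
    """In-memory stand-in for an iSNS server; not the real iSNS protocol."""

    def __init__(self) -> None:
        # target name -> (location, initiator names allowed to access it)
        self._targets: Dict[str, Tuple[str, Set[str]]] = {}

    def register_target(self, name: str, location: str, allowed: Set[str]) -> None:
        """Called by a target to register itself with the server."""
        self._targets[name] = (location, set(allowed))

    def query_targets(self, initiator: str) -> List[Tuple[str, str]]:
        """Return (name, location) for every target this initiator may access."""
        return sorted(
            (name, location)
            for name, (location, allowed) in self._targets.items()
            if initiator in allowed
        )


server = ToyIsnsServer()
host1 = "iqn.2015-04.com.example:host1"
server.register_target("iqn.2015-04.com.example:disk1", "192.0.2.10:3260", {host1})
server.register_target("iqn.2015-04.com.example:disk2", "192.0.2.11:3260", set())

print(server.query_targets(host1))
```

In this sketch only the first target lists host1 as an allowed initiator, so the query returns that single target along with its location.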