Below are some frequently asked basic Storage (SAN) interview questions and answers. See the Storage Area Networks (SAN) basic & advanced concepts page on this site to learn more SAN basics.

Storage Area Networks (SAN) Basic concepts Interview Questions and Answers

31. How can you check errors on a Brocade switch?

Ans :- errshow

32. What are the health check commands on a Brocade switch?

Ans :- >switchshow

>switchstatusshow

>switchstatuspolicyshow

>sensorshow

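33. What are failover and failback?

Ans :- Failover: the process of switching production to a remote site (if the production server fails, the workload moves to the remote site).

Failback: the process of returning production to the original site once it has been restored.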

34. What is 24 bit addressing?

Ans :- It consists of 3 octets of 8 bits each: the 1st octet is the domain ID, the 2nd octet is the port ID, and the 3rd octet is the AL-PA (arbitrated loop physical address).

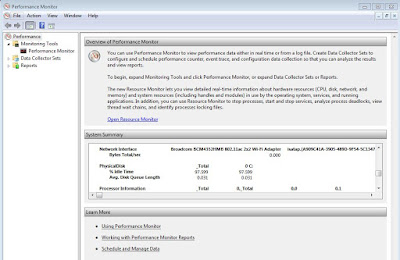
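The octet layout can be illustrated with a few bit shifts. This is a minimal sketch, assuming the address is held as a plain integer; the function name `split_fc_address` and the sample address `0x010400` are illustrative only.

```python
def split_fc_address(fcid: int) -> dict:
    """Split a 24-bit Fibre Channel address into its three octets."""
    if not 0 <= fcid <= 0xFFFFFF:
        raise ValueError("FC address must fit in 24 bits")
    return {
        "domain": (fcid >> 16) & 0xFF,  # 1st octet: domain ID
        "port": (fcid >> 8) & 0xFF,     # 2nd octet: port ID
        "al_pa": fcid & 0xFF,           # 3rd octet: AL-PA
    }


print(split_fc_address(0x010400))
# {'domain': 1, 'port': 4, 'al_pa': 0}
```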

35. What is latency ?

Ans :- The time delay for data to travel from source to destination.

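36. What are FLOGI, PLOGI, and PRLI?

Ans :-

FLOGI (fabric login): the node port logs in to the fabric and obtains its 24-bit address.

PLOGI (port login): one node port logs in to another node port (for example, a host port to a storage port) to exchange service parameters.

PRLI (process login): establishes the upper-layer (for example, SCSI) session between the two ports, after which the host can access the LUNs it has been given permission to use.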
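The logins happen in a fixed order: fabric login (FLOGI), then port login (PLOGI), then process login (PRLI). The sketch below only models that ordering; the names `LOGIN_ORDER` and `next_login` are hypothetical and it does not exchange any real frames.

```python
from typing import List, Optional

# Order in which a port performs its logins.
LOGIN_ORDER = ["FLOGI", "PLOGI", "PRLI"]


def next_login(completed: List[str]) -> Optional[str]:
    """Return the next login a port must perform, or None when all are done."""
    # The completed steps must be a prefix of the expected order.
    if completed != LOGIN_ORDER[:len(completed)]:
        raise ValueError(f"logins out of order: {completed}")
    if len(completed) == len(LOGIN_ORDER):
        return None
    return LOGIN_ORDER[len(completed)]


print(next_login([]))                  # FLOGI
print(next_login(["FLOGI"]))           # PLOGI
print(next_login(["FLOGI", "PLOGI"]))  # PRLI
```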

37. What are the classes of service?

Ans :- Class 1: Acknowledged connection-oriented service

Class 2: Acknowledged connectionless service

Class 3: Unacknowledged connectionless service

Class 4: Fractional-bandwidth connection-oriented service

Class 6: Multicast service

Class F: Switch-to-switch connectionless service with acknowledgement

Classes 2, 3, and F are the ones used in SAN technology.

38. What is FSPF?

Ans :- Fabric Shortest Path First, the routing protocol that selects the lowest-cost path through the fabric to reach a destination.
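FSPF is a link-state protocol, and shortest paths over link-state data are commonly computed with Dijkstra's algorithm. The sketch below shows that computation on a toy fabric; it is not the switch's actual implementation, and the domain IDs and link costs are made-up values.

```python
import heapq


def shortest_path_costs(links, source):
    """Dijkstra over an undirected fabric; links maps (switch_a, switch_b) -> cost."""
    graph = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))

    best = {source: 0}
    queue = [(0, source)]
    while queue:
        cost, node = heapq.heappop(queue)
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry, a cheaper route was already found
        for neighbor, link_cost in graph.get(node, []):
            new_cost = cost + link_cost
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                heapq.heappush(queue, (new_cost, neighbor))
    return best


# Hypothetical fabric: three switches identified by domain ID.
links = {(1, 2): 500, (2, 3): 500, (1, 3): 1500}
print(shortest_path_costs(links, 1))
# {1: 0, 2: 500, 3: 1000}
```

Here the two-hop route from domain 1 to domain 3 wins because its total cost (1000) is lower than the direct link (1500).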

39. What are FCP, FCIP, FCoE, and iFCP?

Fibre Channel Protocol (FCP) is a transport protocol (similar to TCP used in IP networks) that predominantly transports SCSI commands over Fibre Channel.

Fibre Channel over IP (FCIP or FC/IP, also known as Fibre Channel tunneling or storage tunneling) is an IETF-defined protocol that tunnels Fibre Channel frames over IP networks.

Fibre Channel over Ethernet (FCoE) is a computer network technology that encapsulates Fibre Channel frames over Ethernet networks.

iFCP (Internet Fibre Channel Protocol) is an emerging standard for extending Fibre Channel storage networks across the Internet.

40. What is hop count?

Ans :- The number of hops (switch-to-switch links) a frame crosses to get from source to destination.
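A hop count can be worked out with a breadth-first search over the inter-switch links. This is a minimal sketch, assuming each hop is one inter-switch link crossed; the switch names and the `hop_count` helper are hypothetical.

```python
from collections import deque


def hop_count(isls, src, dst):
    """Return the fewest ISL hops between two switches, or None if unreachable."""
    neighbors = {}
    for a, b in isls:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)

    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        switch, hops = queue.popleft()
        if switch == dst:
            return hops
        for nxt in neighbors.get(switch, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + 1))
    return None


# Hypothetical fabric: four switches cabled in a chain.
isls = [("sw1", "sw2"), ("sw2", "sw3"), ("sw3", "sw4")]
print(hop_count(isls, "sw1", "sw4"))  # 3
```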

41. Tell me about FC topologies. What are private and public loops?

FC topologies are: point-to-point, arbitrated loop (FC-AL), and switched fabric.

Private : No Fabric connection

Public : attached to a ... Channel network in which up to 126 nodes are connected in a loop topology

42. What is firmware?

Ans :- Permanent software programmed into read-only memory.

43. What is ASIC?

Ans :- Application-specific integrated circuit. It is the processing chip of a Brocade switch.

44. What is SDD?

Ans :- Subsystem Device Driver, IBM's multipathing software, which manages both path failover and preferred-path selection.
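The idea behind a multipathing driver can be sketched as a simple path selector: use the preferred path while it is healthy and fail over to another path when it is not. This is an illustration of the concept only, not the driver's real algorithm; the path names and the `choose_path` helper are hypothetical.

```python
from typing import Dict, Optional


def choose_path(path_up: Dict[str, bool], preferred: str) -> Optional[str]:
    """Pick the preferred path if it is up, else fail over to any other healthy path."""
    if path_up.get(preferred):
        return preferred
    for name, up in path_up.items():
        if up:
            return name
    return None  # no healthy path is left


paths = {"hba0": True, "hba1": True}
print(choose_path(paths, "hba0"))  # hba0

paths["hba0"] = False              # simulate a failure on the preferred path
print(choose_path(paths, "hba0"))  # hba1
```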

45. What is Fabric Watch?

Ans :- Fabric Watch tracks a variety of SAN fabric elements, events, and counters. It provides continuous monitoring of the end-to-end fabric, including switches, ports, and Inter-Switch Links.
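Counter monitoring of this kind comes down to comparing each tracked value against a limit. The sketch below shows that comparison in isolation; it does not use any Fabric Watch interface, and the counter names and threshold values are invented for the example.

```python
def check_thresholds(counters, thresholds):
    """Return the names of counters whose value exceeds its threshold."""
    return sorted(
        name
        for name, value in counters.items()
        if name in thresholds and value > thresholds[name]
    )


# Hypothetical readings and limits.
counters = {"crc_errors": 12, "link_failures": 0, "temperature_c": 41}
thresholds = {"crc_errors": 5, "link_failures": 2, "temperature_c": 60}
print(check_thresholds(counters, thresholds))  # ['crc_errors']
```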

46. What do the LED indicators on a Brocade switch mean?

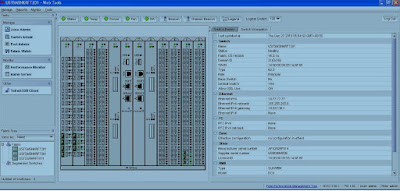

Switch beacon: if the LED is yellow or red, the switch is not working. If it is green, the switch is working.

47. What is NPIV? How do you assign NPIV?

Ans :- N_Port ID Virtualization (NPIV) is a technology that defines how multiple virtual servers can share a single physical Fibre Channel port identifier.

48. What is Node?

It is an entity or device that connects to the network to access services.

49. Brocade architecture?

Ans :- It consists of an ASIC processor, cache, NSD RAM, and a console port (DB-9).

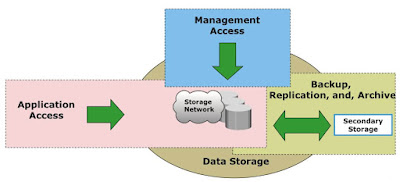

50. What is fabric?

Ans :- A collection of interconnected switches, from the same vendor or from different vendors.

51. What are the FC protocols?

Ans :- i) FCP

ii) FCIP

iii) iFCP

iv) FCoE