Information made available on a network is exposed to security threats from a variety of sources, so specific controls must be implemented to secure the information stored on an organization’s storage infrastructure. Deploying these controls requires a clear understanding of the access paths leading to storage resources.

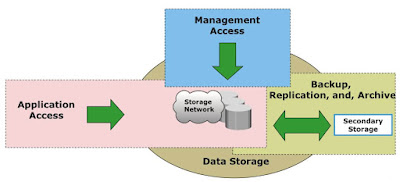

If each component within the infrastructure is considered a potential access point, the attack surface of all these access points must be analyzed to identify the associated vulnerabilities. To identify the threats that apply to a storage infrastructure, access paths to data storage can be categorized into three security domains.

Image Credits - EMC

- Application Access - Access to the stored data through the storage network. The application access domain may include only those applications that access the data through the file system or a database interface.

- Management Access - Access to storage and interconnecting devices and to the data residing on those devices. Management access, whether for monitoring, provisioning, or managing storage resources, is associated with every device within the storage environment. Most management software supports some form of CLI, system management console, or web-based interface. Securing management applications with appropriate controls is important because these applications reach every device, so the damage caused by their misuse can be extensive.

- Backup, Replication, and Archive Access - This domain is primarily accessed by storage administrators who configure and manage the environment. Along with the access points in this domain, the backup and replication media also need to be secured.

The Key Security Threats across the Domains

To secure the storage environment, identify the attack surface and existing threats within each of the security domains and classify the threats based on the security goals — availability, confidentiality, and integrity.

- Unauthorized access - Unauthorized access is the act of illegally gaining access to an organization’s information systems, including its servers, network, storage, and management servers. An attacker may gain unauthorized access to the organization’s applications, data, or storage resources in various ways, such as bypassing access controls, exploiting a vulnerability in the operating system, hardware, or an application, elevating privileges, spoofing an identity, or stealing a device.

- Denial of Service (DoS) - A Denial of Service (DoS) attack prevents legitimate users from accessing resources or services. DoS attacks can be targeted against servers, networks, or storage resources in a storage environment. In all cases, the intent of DoS is to exhaust key resources, such as network bandwidth or CPU cycles, thereby impacting production use.

- Distributed DoS (DDoS) attack - A Distributed DoS (DDoS) attack is a variant of DoS attack in which several systems launch a coordinated, simultaneous DoS attack on their target(s), thereby causing denial of service to the users of the targeted system(s).

- Data loss - Data loss can occur in a storage environment for reasons other than malicious attacks, such as accidental deletion by an administrator or destruction resulting from natural disasters. Deploying appropriate measures such as data backup or replication can reduce the impact of such events.

- Malicious Insiders - According to the Computer Emergency Response Team (CERT), a malicious insider could be an organization’s current or former employee, contractor, or other business partner who has or had authorized access to the organization’s servers, network, or storage. These malicious insiders may intentionally misuse that access in ways that negatively impact the confidentiality, integrity, or availability of the organization’s information or resources. For example, a former employee who had access to the organization’s storage resources may be aware of security weaknesses in that storage environment. This is a serious threat because the malicious insider may exploit those weaknesses.

- Account Hijacking - Account hijacking refers to a scenario in which an attacker gains access to an administrator’s or user’s account(s) using methods such as phishing or installing keystroke-logging malware on the administrator’s or user’s systems. Phishing is an example of a social engineering attack that is used to deceive users.

- Insecure APIs - Application programming interfaces (APIs) are used extensively in software-defined and cloud environments. They integrate with management software to perform activities such as resource provisioning, configuration, monitoring, management, and orchestration. These APIs may be open or proprietary. The security of the storage infrastructure depends on the security of these APIs. An attacker may exploit a vulnerability in an API to breach a storage infrastructure’s perimeter and carry out an attack. Therefore, APIs must be designed and developed following security best practices such as requiring authentication and authorization, validating input, and avoiding buffer overflows.

- Shared technology vulnerabilities - The technologies used to build today’s storage infrastructure provide a multi-tenant environment that enables the sharing of resources. Multi-tenancy is achieved by using controls that separate resources such as memory and storage for each application. Failure of these controls may expose the confidential data of one business unit to users of other business units, raising security risks.

- Media Theft - Backup and replication are essential business continuity processes in any data center. However, inadequate security controls may expose an organization’s confidential information to an attacker. There is a risk of a backup tape being lost, stolen, or misplaced, and the threat is especially severe if the tapes contain highly confidential information. An attacker may also gain access to an organization’s confidential data by spoofing the identity of the DR site.
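
The API practices named in the list above (requiring authentication and authorization, and validating input) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular storage product implements its management API: the token table, role table, `handle_provision` function, and the size and name limits are all hypothetical. Python has no raw buffers to overflow, so the bounded name length stands in for the "avoid buffer overflows" practice.

```python
import hmac
import re

# Hypothetical credentials and roles, for illustration only.
API_TOKENS = {"token-admin-1": "alice", "token-ops-1": "bob"}
ROLES = {"alice": {"provision", "monitor"}, "bob": {"monitor"}}

# Input validation: short names from a fixed character set, bounded size.
VOLUME_NAME = re.compile(r"[A-Za-z0-9_-]{1,32}")
MAX_SIZE_GB = 16384  # assumed upper limit


def authenticate(token):
    """Return the user name for a valid token, or None."""
    if not isinstance(token, str):
        return None
    for known, user in API_TOKENS.items():
        # compare_digest avoids leaking token contents through timing.
        if hmac.compare_digest(known.encode(), token.encode()):
            return user
    return None


def handle_provision(token, volume_name, size_gb):
    """Check a hypothetical 'provision volume' request before acting on it."""
    user = authenticate(token)
    if user is None:
        return 401, "authentication failed"
    if "provision" not in ROLES.get(user, set()):
        return 403, "not authorized to provision"
    if not isinstance(volume_name, str) or not VOLUME_NAME.fullmatch(volume_name):
        return 400, "invalid volume name"
    if isinstance(size_gb, bool) or not isinstance(size_gb, int):
        return 400, "invalid size"
    if not 1 <= size_gb <= MAX_SIZE_GB:
        return 400, "invalid size"
    return 200, f"volume {volume_name} ({size_gb} GB) accepted for {user}"
```

The checks run in a fixed order (authenticate, authorize, then validate) so that an unauthenticated caller learns nothing about which inputs the API would accept.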

The Key Information Security Controls

At the server level, security controls are deployed to secure hypervisors and hypervisor management systems, virtual machines, guest operating systems, and applications. Security at the network level commonly includes firewalls, demilitarized zones, intrusion detection and prevention systems, virtual private networks, zoning and iSNS discovery domains, port binding and fabric binding configurations, and VLANs and VSANs. At the storage level, security controls include LUN masking, data shredding, and data encryption. Apart from these security controls, the storage infrastructure also requires identity and access management, role-based access control, and physical security arrangements. The key information security controls are:

- Physical Security

- Identity and Access Management

- Role-based Access Control

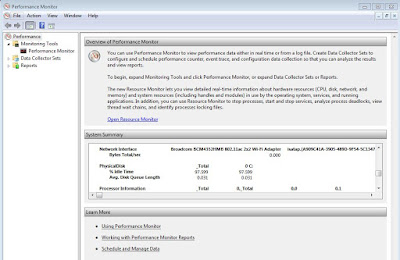

- Network Monitoring and Analysis

- Firewalls

- Intrusion Detection and Prevention System

- Adaptive Security

- Virtual Private Networks

- Virtual LAN & Virtual SAN

- Zoning and iSNS discovery domain

- Port binding and fabric binding

- Securing hypervisor and management server

- VM, OS and Application hardening

- Malware Protection Software

- Mobile Device Management

- LUN Masking

- Data Encryption

- Data Shredding
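
As an illustration of one storage-level control from the list above, LUN masking restricts which hosts can see which LUNs on a storage system. The sketch below models that idea as a lookup table keyed by initiator WWPN. It is a simplified sketch, not a vendor implementation: the WWPNs, LUN IDs, and the `MASKING_TABLE`, `visible_luns`, and `can_access` names are all hypothetical.

```python
# Hypothetical masking table: initiator WWPN -> set of LUN IDs it may access.
MASKING_TABLE = {
    "10:00:00:00:c9:00:00:01": {0, 1},
    "10:00:00:00:c9:00:00:02": {2},
}


def visible_luns(initiator_wwpn, all_luns):
    """Return the LUNs an initiator may see; unknown initiators see none."""
    allowed = MASKING_TABLE.get(initiator_wwpn.lower(), set())
    return sorted(lun for lun in all_luns if lun in allowed)


def can_access(initiator_wwpn, lun_id):
    """Default-deny check for a single LUN."""
    return lun_id in MASKING_TABLE.get(initiator_wwpn.lower(), set())
```

The table is default-deny: an initiator that is not listed gets an empty view, so a newly attached or spoofed host is not granted access by omission.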
