Cache is a semiconductor memory where data is placed temporarily to reduce the time required to service I/O requests from the hosts. Cache improves storage system performance by isolating compute systems from the storage (HDDs and SSDs).

The performance improvement is most significant when the back-end storage consists of hard disk drives (HDDs). In this case, cache isolates hosts from the mechanical delays of rotating disks, which are the slowest component of an intelligent storage system. Data access on rotating disks usually takes several milliseconds because of seek time and rotational latency, whereas accessing data from cache typically takes less than a millisecond. On intelligent storage systems, write data is first placed in cache and then written to the storage.

How Cache Read/Write operations work in the Storage System

Read Operation

Read performance is measured in terms of the read hit ratio (or hit rate), usually expressed as a percentage. A read hit occurs when the requested data is found in cache, so the host does not have to wait for the storage drives. The ratio is the number of read hits divided by the total number of read requests. A higher read hit ratio means better read performance.
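As a small worked example, the read hit ratio can be computed from two counters. The function name and the counter values below are made up for illustration and do not come from any particular storage system.

```python
def read_hit_ratio(read_hits: int, total_reads: int) -> float:
    """Return the read hit ratio as a percentage of all read requests."""
    if total_reads <= 0:
        raise ValueError("total_reads must be positive")
    if not 0 <= read_hits <= total_reads:
        raise ValueError("read_hits must be between 0 and total_reads")
    return 100.0 * read_hits / total_reads


# Hypothetical counters: 850 of 1,000 read requests were served from cache.
print(f"{read_hit_ratio(850, 1000):.1f}%")  # prints "85.0%"
```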

Also Read: Solid State Drive (SSD) Overview


Write Operation

When an I/O is written to cache and acknowledged, it is completed in less time from the host’s perspective than it would take to write directly to storage. Write operations with cache provide performance advantages over writing directly to storage.

Sequential writes also offer opportunities for optimization, because cache allows many smaller writes to be coalesced into larger transfers to storage. A write operation with cache is implemented in one of the following ways:

- Write-through cache: Data is placed in the cache and immediately written to the storage, and an acknowledgement is sent to the host. Because data is committed to storage as it arrives, the risks of data loss are low, but the write-response time is longer because of the storage operations.

- Write-back cache: Data is placed in cache and an acknowledgement is sent to the host immediately. Later, data from several writes are committed (de-staged) to the storage. Write response times are much faster because the write operations are isolated from the storage devices. However, uncommitted data is at risk of loss if cache failures occur.

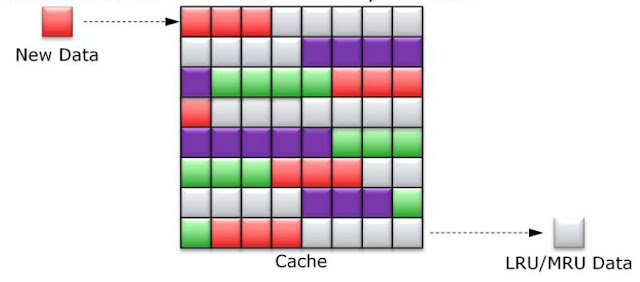
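The difference between the two write policies can be sketched in a few lines. This is a toy model under stated assumptions, not how any real array is implemented: a plain `dict` stands in for the back-end storage, and the class and method names (`WriteCache`, `write`, `destage`) are hypothetical.

```python
class WriteCache:
    """Toy cache in front of a dict that stands in for back-end storage."""

    def __init__(self, storage: dict, write_back: bool):
        self.storage = storage
        self.write_back = write_back
        self.pages = {}     # address -> data held in cache
        self.dirty = set()  # addresses not yet committed to storage

    def write(self, address: int, data: bytes) -> str:
        self.pages[address] = data
        if self.write_back:
            # Write-back: acknowledge now, commit later in destage().
            self.dirty.add(address)
        else:
            # Write-through: commit to storage before acknowledging.
            self.storage[address] = data
        return "ack"

    def destage(self) -> int:
        """Commit all dirty pages to storage; return how many were written."""
        count = len(self.dirty)
        for address in sorted(self.dirty):
            self.storage[address] = self.pages[address]
        self.dirty.clear()
        return count


disk = {}
cache = WriteCache(disk, write_back=True)
cache.write(7, b"a")
cache.write(8, b"b")
print(len(disk))        # 0: both writes are acknowledged but uncommitted
print(cache.destage())  # 2: both pages are committed in one pass
```

In the write-back case, anything still in `dirty` when the cache fails is lost, which is the risk described above.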

Managing Cache

Cache is an expensive resource that needs proper management. Even though modern intelligent storage systems come with a large amount of cache, when all cache pages are filled, some pages have to be freed up to accommodate new data and avoid performance degradation. Various cache management algorithms are implemented in intelligent storage systems to proactively maintain a set of free pages and a list of pages that can be freed up whenever required. The most commonly used algorithms are:

- Least Recently Used (LRU): An algorithm that continuously monitors data access in cache and identifies the cache pages that have not been accessed for a long time. LRU either frees up these pages or marks them for reuse. This algorithm is based on the assumption that data that has not been accessed for a while will not be requested by the hosts. However, if a page contains write data that has not yet been committed to storage, the data is first written to the storage before the page is reused.

- Most Recently Used (MRU): This algorithm is the opposite of LRU, where the pages that have been accessed most recently are freed up or marked for reuse. This algorithm is based on the assumption that recently accessed data may not be required for a while.
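The two policies above can be illustrated with a minimal sketch built on Python's `collections.OrderedDict`. It is only an approximation for teaching purposes: the `PageCache` class, its methods, and the use of a `dict` as storage are assumptions, and real storage systems maintain free-page lists proactively rather than evicting on demand as done here.

```python
from collections import OrderedDict


class PageCache:
    """Toy page cache with LRU or MRU replacement over a dict 'storage'."""

    def __init__(self, capacity: int, storage: dict, policy: str = "LRU"):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        if policy not in ("LRU", "MRU"):
            raise ValueError("policy must be 'LRU' or 'MRU'")
        self.capacity = capacity
        self.storage = storage
        self.policy = policy
        # address -> (data, dirty); ordered from least to most recently used
        self.pages = OrderedDict()

    def _evict(self) -> None:
        # last=False pops the least recently used page, last=True the most.
        address, (data, dirty) = self.pages.popitem(last=(self.policy == "MRU"))
        if dirty:
            # Uncommitted write data is written to storage before page reuse.
            self.storage[address] = data

    def _put(self, address, data, dirty: bool) -> None:
        if address not in self.pages and len(self.pages) >= self.capacity:
            self._evict()
        self.pages[address] = (data, dirty)
        self.pages.move_to_end(address)

    def read(self, address):
        if address in self.pages:            # read hit
            self.pages.move_to_end(address)
            return self.pages[address][0]
        data = self.storage[address]         # read miss: fetch from storage
        self._put(address, data, dirty=False)
        return data

    def write(self, address, data) -> None:
        self._put(address, data, dirty=True)


storage = {1: "a", 2: "b", 3: "c"}
lru = PageCache(2, storage, policy="LRU")
for address in (1, 2, 1, 3):
    lru.read(address)
print(list(lru.pages))  # [1, 3]: page 2 was least recently used
```

With `policy="MRU"` the same access sequence would evict page 1 instead, because it was the page touched most recently before the miss on page 3.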

Also Read: Accessing data from the Intelligent Storage Systems

As cache fills, the storage system must take action to flush dirty pages (data written into the cache but not yet written to the storage) to manage space availability. Flushing is the process that commits data from cache to the storage. On the basis of the I/O access rate and pattern, high and low levels called watermarks are set in cache to manage the flushing process. High watermark (HWM) is the cache utilization level at which the storage system starts high-speed flushing of cache data. Low watermark (LWM) is the point at which the storage system stops flushing data to the storage drives. The cache utilization level drives the mode of flushing to be used:


- Idle flushing: It occurs continuously, at a modest rate, when the cache utilization level is between the high and the low watermark.

- High watermark flushing: It is activated when cache utilization hits the high watermark. The storage system dedicates some additional resources for flushing. This type of flushing has some impact on I/O processing.

- Forced flushing: It occurs in the event of a large I/O burst when cache reaches 100 percent of its capacity, which significantly affects the I/O response time. In forced flushing, the system flushes the cache on priority by allocating more resources. The rate of flushing and the rate at which compute system I/O is accepted into cache are managed dynamically to optimize storage system performance.
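The relationship between cache utilization and flushing mode can be sketched as a simple lookup. This is a simplified, stateless reading of the three modes above: the function name, the mode labels, the 60 and 80 percent watermarks, and the choice to treat a value exactly at a watermark as belonging to the higher mode are all assumptions for illustration.

```python
def flush_mode(utilization: float, lwm: float, hwm: float) -> str:
    """Map cache utilization (percent) to a flushing mode."""
    if not 0 <= lwm < hwm <= 100:
        raise ValueError("watermarks must satisfy 0 <= lwm < hwm <= 100")
    if not 0 <= utilization <= 100:
        raise ValueError("utilization must be between 0 and 100")
    if utilization >= 100:
        return "forced"          # cache is full; flush on priority
    if utilization >= hwm:
        return "high watermark"  # dedicate additional resources to flushing
    if utilization >= lwm:
        return "idle"            # continuous flushing at a modest rate
    return "none"                # below the LWM, flushing stops


# Hypothetical watermarks: LWM at 60 percent, HWM at 80 percent.
for level in (50, 70, 85, 100):
    print(level, flush_mode(level, lwm=60, hwm=80))
```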

Protecting the Cache

Cache is volatile memory, so a power failure or any kind of cache failure will cause the loss of data that is not yet committed to the storage drives. This risk of losing uncommitted data held in cache can be mitigated using cache mirroring and cache vaulting:

- Cache mirroring: Each write to cache is held in two different memory locations on two independent memory cards. If a cache failure occurs, the write data is still safe in the mirrored location and can be committed to the storage drive. Because only writes are mirrored, this method results in better utilization of the available cache. Mirroring introduces the problem of maintaining cache coherency, which means that data in the two cache locations must be identical at all times. It is the responsibility of the storage system's operating environment to ensure coherency.

- Cache vaulting: The risk of data loss due to power failure can be addressed in various ways, such as powering the memory with a battery until AC power is restored or using battery power to write the cache content to the storage drives. If an extended power failure occurs, relying on batteries alone is not a viable option. This is because in intelligent storage systems, large amounts of data might need to be committed to numerous storage drives, and batteries might not provide power long enough to write each piece of data to its intended storage drive. Therefore, storage vendors use a set of physical storage drives to dump the contents of cache during a power failure. This is called cache vaulting, and the storage drives are called vault drives. When power is restored, data from the vault drives is written back to write cache and then written to the intended drives.
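Cache mirroring, described in the list above, can be sketched as a toy model. Two dictionaries stand in for the two independent memory cards and a third for the storage drives. The class and method names (`MirroredWriteCache`, `fail_card`, `destage`) are hypothetical, and the sketch ignores timing, partial writes, and vaulting.

```python
class MirroredWriteCache:
    """Toy write cache that keeps every write on two 'memory cards'."""

    def __init__(self, storage: dict):
        self.storage = storage
        self.cards = [{}, {}]  # two independent copies of the write data

    def write(self, address, data) -> str:
        for card in self.cards:
            if card is not None:
                card[address] = data
        return "ack"

    def is_coherent(self) -> bool:
        # Coherency: both copies must hold identical data.
        return self.cards[0] == self.cards[1]

    def fail_card(self, index: int) -> None:
        self.cards[index] = None  # simulate losing one memory card

    def destage(self) -> int:
        """Commit write data from a surviving copy; return pages written."""
        survivor = next((c for c in self.cards if c is not None), None)
        if survivor is None:
            raise RuntimeError("both cache copies lost; data is unrecoverable")
        count = len(survivor)
        self.storage.update(survivor)
        for card in self.cards:
            if card is not None:
                card.clear()
        return count


drives = {}
cache = MirroredWriteCache(drives)
cache.write(10, b"payload")
cache.fail_card(0)      # one card fails before the data is committed
print(cache.destage())  # 1: the mirrored copy is still committed
```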
