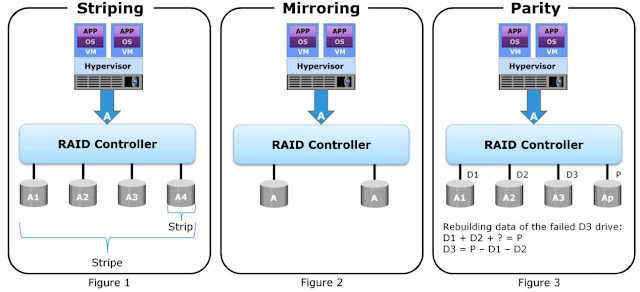

RAID 0 offers no data redundancy because it uses neither parity nor mirroring. Instead, it stores data with striping: the data is spread across all the drives in the RAID set, so several drives can service I/O in parallel.

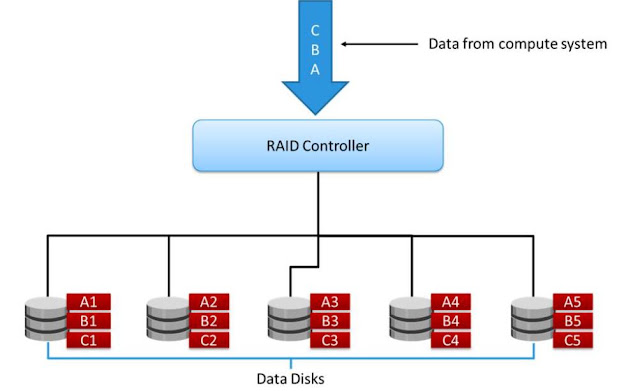

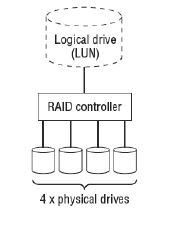

In a RAID 0 configuration, data is striped across all the disks in the RAID set, so the full storage capacity of the set is available for data. To read data, the controller gathers the strips from all the drives. As the number of drives in the RAID set increases, performance improves because more data can be read or written simultaneously.
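The striping and gathering described above can be sketched in a few lines of Python (3.9 or later). This is an illustrative model only, not how any particular controller is implemented: the function names `locate_block`, `stripe`, and `gather`, the round-robin strip placement, and the strip sizes are all assumptions made for the example.

```python
def locate_block(logical_block: int, num_drives: int, strip_blocks: int) -> tuple[int, int]:
    """Map a logical block number to (drive index, block offset on that drive).

    Assumes strips are placed round-robin across the drives (hypothetical layout).
    """
    strip_index = logical_block // strip_blocks     # which strip holds the block
    offset_in_strip = logical_block % strip_blocks  # position inside that strip
    drive = strip_index % num_drives                # strips rotate across the drives
    stripe_row = strip_index // num_drives          # full stripes that come before it
    return drive, stripe_row * strip_blocks + offset_in_strip


def stripe(data: bytes, num_drives: int, strip_size: int) -> list[bytes]:
    """Split data into strips and distribute them round-robin over the drives."""
    drives = [bytearray() for _ in range(num_drives)]
    for start in range(0, len(data), strip_size):
        drive = (start // strip_size) % num_drives
        drives[drive] += data[start:start + strip_size]
    return [bytes(d) for d in drives]


def gather(drives: list[bytes], strip_size: int) -> bytes:
    """Rebuild the original data by reading one strip per drive, row by row."""
    out = bytearray()
    row = 0
    while True:
        read_any = False
        for drive in drives:
            strip = drive[row * strip_size:(row + 1) * strip_size]
            if strip:
                out += strip
                read_any = True
        if not read_any:  # no drive has data in this row: we are done
            break
        row += 1
    return bytes(out)


if __name__ == "__main__":
    drives = stripe(b"ABCDEFGHIJ", num_drives=3, strip_size=2)
    print(drives)
    print(gather(drives, strip_size=2))
```

In this model every drive holds only a fraction of each file, which is why reads and writes can proceed on all drives at once, and also why no single drive holds a usable copy of the data.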

RAID 0 is a good option for applications that need high I/O throughput. However, it provides no data protection, so it cannot keep data available through a drive failure and is unsuitable for applications that require high availability. When any drive in a RAID 0 set fails, the data on that drive is lost, and because every stripe spans all the drives, the data in the whole set becomes unrecoverable unless you have another form of protection (backup or replication).
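Because a single drive failure takes the whole set with it, the risk of losing the array grows with the number of drives. A rough way to see this is sketched below, under the simplifying assumption that drives fail independently and with the same probability over some period. The function name and the 3 percent figure are hypothetical values chosen for illustration, not measured failure rates.

```python
def array_failure_probability(drive_failure_prob: float, num_drives: int) -> float:
    """Probability that at least one drive fails, i.e. that the RAID 0 set is lost.

    Assumes independent, identically likely drive failures (a simplification).
    """
    all_survive = (1.0 - drive_failure_prob) ** num_drives
    return 1.0 - all_survive


if __name__ == "__main__":
    # Hypothetical per-drive failure probability of 3% over the period of interest.
    for n in (1, 2, 4, 8):
        print(n, round(array_failure_probability(0.03, n), 4))
```

The same drives that add throughput also add points of failure, which is the trade-off to weigh when sizing a RAID 0 set.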

RAID 0 Use Cases

Choose RAID 0 if:

- The data is 100 percent scratch data: losing it is not a problem, and you need as much capacity out of your drives as possible.

- Some other form of data protection exists, such as network RAID or a replica copy, that you can use if you lose the data in your RAID 0 set.

- You want to create a striped logical volume on top of volumes that are already RAID protected, for example when creating LVM volumes on already RAID-protected storage in Linux servers.

Advantages

- RAID 0 offers great performance in both read and write operations. There is no overhead from parity calculations.

- All storage capacity is used; there is no capacity overhead.

- The technology is easy to implement.

Disadvantages

- RAID 0 is not fault-tolerant. If one drive fails, all data in the RAID 0 array is lost. It should not be used for mission-critical systems.