How to define Backup and Archive Copy Groups

After defining a management class, you have to define backup and archive copy groups, which contain the parameters that control the generation and expiration of backup and archive data.

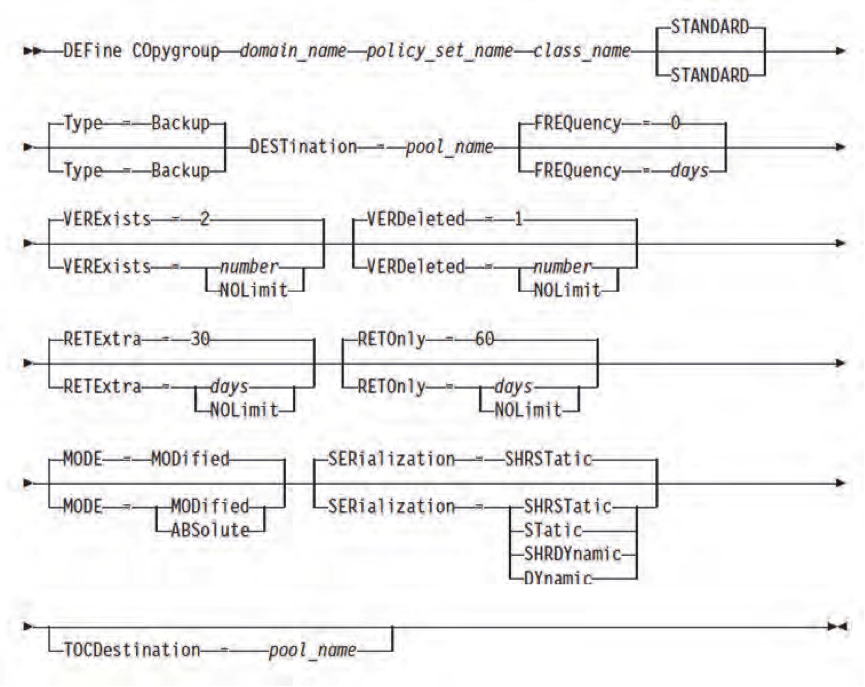

Defining BACKUP Copy Group

Use the following command syntax to define a backup copy group:

DOMAIN_NAME (Required): Specifies the name of the policy domain that you are defining the copy group for.

POLICY_SET_NAME (Required): Specifies the name of the policy set that you are defining the copy group for.

CLASS_NAME (Required): Specifies the name of the management class that you are defining the copy group for.

STANDARD (Optional): Specifies the name of the copy group as STANDARD. The name of the copy group must be STANDARD, which is the default value.

TYPE=Backup (Optional): Specifies that you want to define a backup copy group. The default value is BACKUP.

VEREXISTS (Optional): Specifies the maximum number of backup versions to retain for files that are currently on the client file system. The default value is 2. The other option is NOLIMIT.

VERDELETED (Optional): Specifies the maximum number of backup versions to retain for files that are deleted from the client file system after using Tivoli Storage Manager to back them up. The default value is 1. The other option is NOLIMIT.

RETEXTRA (Optional): Specifies the number of days to retain a backup version after that version becomes inactive. A version of a file becomes inactive when the client stores a more recent backup version, or when the client deletes the file from the workstation and then runs a full incremental backup. The server deletes inactive versions based on retention time even if the number of inactive versions does not exceed the number allowed by the VEREXISTS or VERDELETED parameters. The default value is 30 days. The other option is NOLIMIT.

RETONLY (Optional): Specifies the number of days to retain the last backup version of a file that is deleted from the client file system. The default value is 60. The other option is NOLIMIT.

DESTINATION (Required): Specifies the name of a storage pool that is defined. BACKUPPOOL is the default for the backup copy group, and ARCHIVEPOOL is the default for the archive copy group.

FREQUENCY=freqvalue (Optional): Specifies the minimum interval, in days, between successive backups. The default value is 0.

MODE=mode (Optional): Specifies whether a file is backed up based on changes made to the file since the last time that it was backed up. Use the MODE value only for incremental backup. This value is bypassed during selective backup. The default value is MODIFIED, which backs up a file only if it has changed since the last backup. The other option is ABSOLUTE, which backs up a file regardless of whether it has been modified.

SERIALIZATION=serialvalue (Optional): Specifies how files or directories are handled if they are modified during backup processing, and what Tivoli Storage Manager does if a modification occurs. The default value is SHRSTATIC.

The SHRSTATIC parameter specifies that Tivoli Storage Manager backs up a file or directory only if it is not modified during the backup or archive operation. Tivoli Storage Manager attempts to perform a backup or archive operation as many as four times, depending on the value specified for the CHANGINGRETRIES client option. If the file or directory is modified during each backup or archive attempt, Tivoli Storage Manager does not process it. Other options are STATIC, SHRDYNAMIC, and DYNAMIC.
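The interaction between the version-count and retention-time parameters can be easier to follow in code. The Python sketch below is a simplified model, not the server's actual expiration algorithm: the class and function names are hypothetical, `None` stands in for NOLIMIT, and it assumes that the newest version of a deleted file is governed by RETONLY while all other inactive versions are governed by RETEXTRA.

```python
from dataclasses import dataclass
from typing import List, Optional

# None stands in for the NOLIMIT keyword in this sketch.
NOLIMIT = None


@dataclass
class BackupCopyGroup:
    """Retention settings, using the defaults quoted in the text."""
    verexists: Optional[int] = 2
    verdeleted: Optional[int] = 1
    retextra: Optional[int] = 30
    retonly: Optional[int] = 60


def within(limit: Optional[int], value: int) -> bool:
    """True if value does not exceed limit; NOLIMIT never excludes."""
    return limit is NOLIMIT or value <= limit


def keep_inactive(days_inactive: List[int], file_exists: bool,
                  cg: BackupCopyGroup) -> List[bool]:
    """Decide which inactive versions survive expiration.

    days_inactive lists the inactive versions newest first; each entry is
    the number of days since that version became inactive.
    """
    keep = []
    for index, age in enumerate(days_inactive):
        if file_exists:
            # The active version uses one VEREXISTS slot, so the newest
            # inactive version is version number 2.
            in_count = within(cg.verexists, index + 2)
            in_time = within(cg.retextra, age)
        else:
            # Every version of a deleted file is inactive.
            in_count = within(cg.verdeleted, index + 1)
            # Assumption: the newest remaining version follows RETONLY.
            limit = cg.retonly if index == 0 else cg.retextra
            in_time = within(limit, age)
        keep.append(in_count and in_time)
    return keep
```

With the defaults, `keep_inactive([5, 10, 40], True, BackupCopyGroup())` returns `[True, False, False]`: only one inactive version fits beside the active one. For a deleted file, `keep_inactive([45, 50], False, BackupCopyGroup())` returns `[True, False]`, because the single version allowed by VERDELETED is still inside the 60-day RETONLY window.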

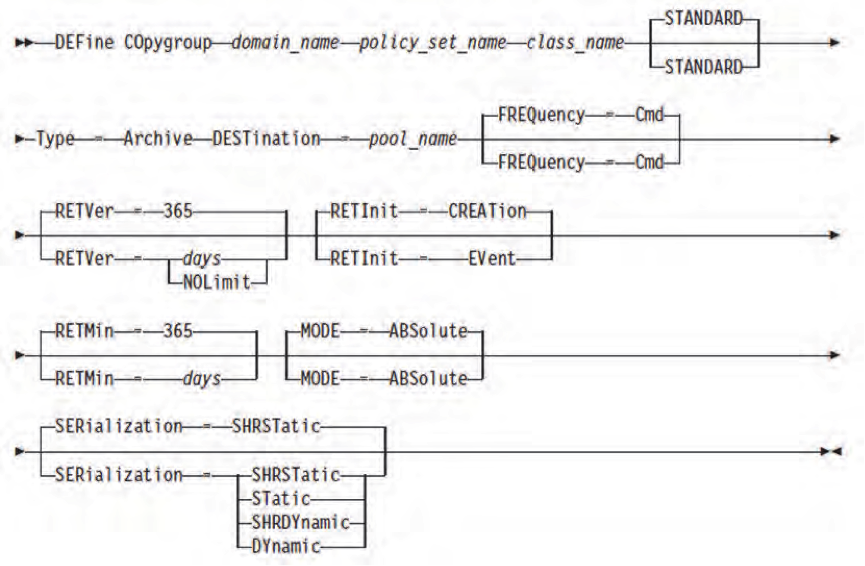

Defining Archive Copy Group

Use the following command syntax to define an archive copy group:

For an archive copy group, the retention parameters are different, as shown above; the remaining parameters are the same as for a backup copy group.

TYPE=Archive (Required): Specifies that you want to define an archive copy group. Because the default value is BACKUP, you must specify TYPE=Archive explicitly.

RETVER (Optional): Specifies the number of days to keep an archive copy. The default value is 365. The other option is NOLIMIT.

RETINIT (Optional): Specifies the trigger to initiate the retention time that the RETVER attribute specifies. The default value is CREATION. The other option is EVENT.

RETMIN (Optional): Specifies the minimum number of days to keep an archive copy after it is archived. This parameter applies only when RETINIT=EVENT. The default value is 365.
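As a rough illustration of how RETVER, RETINIT, and RETMIN combine, the Python sketch below computes the earliest date on which an archive copy could expire. The function name and arguments are hypothetical, NOLIMIT is not modeled, and the EVENT branch assumes that a copy is kept for at least RETMIN days after it is archived and at least RETVER days after the event is signaled.

```python
from datetime import date, timedelta
from typing import Optional


def archive_expiry(archived_on: date, retver: int = 365,
                   retinit: str = "CREATION", retmin: int = 365,
                   event_on: Optional[date] = None) -> Optional[date]:
    """Return the earliest date an archive copy becomes eligible to expire.

    Returns None while an EVENT-based copy is still waiting for its event.
    """
    if retinit == "CREATION":
        # Retention starts when the copy is stored on the server.
        return archived_on + timedelta(days=retver)
    if retinit == "EVENT":
        if event_on is None:
            return None
        # Both the RETMIN floor and the post-event RETVER must be satisfied.
        return max(archived_on + timedelta(days=retmin),
                   event_on + timedelta(days=retver))
    raise ValueError(f"unknown RETINIT value: {retinit}")
```

For example, a copy archived on 1 January 2023 with the defaults becomes eligible on 1 January 2024. With RETINIT=EVENT, RETVER=30, and an event on 1 February 2023, the result is still 1 January 2024, because the RETMIN floor of 365 days is the later of the two dates.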

Assigning Default Management Class

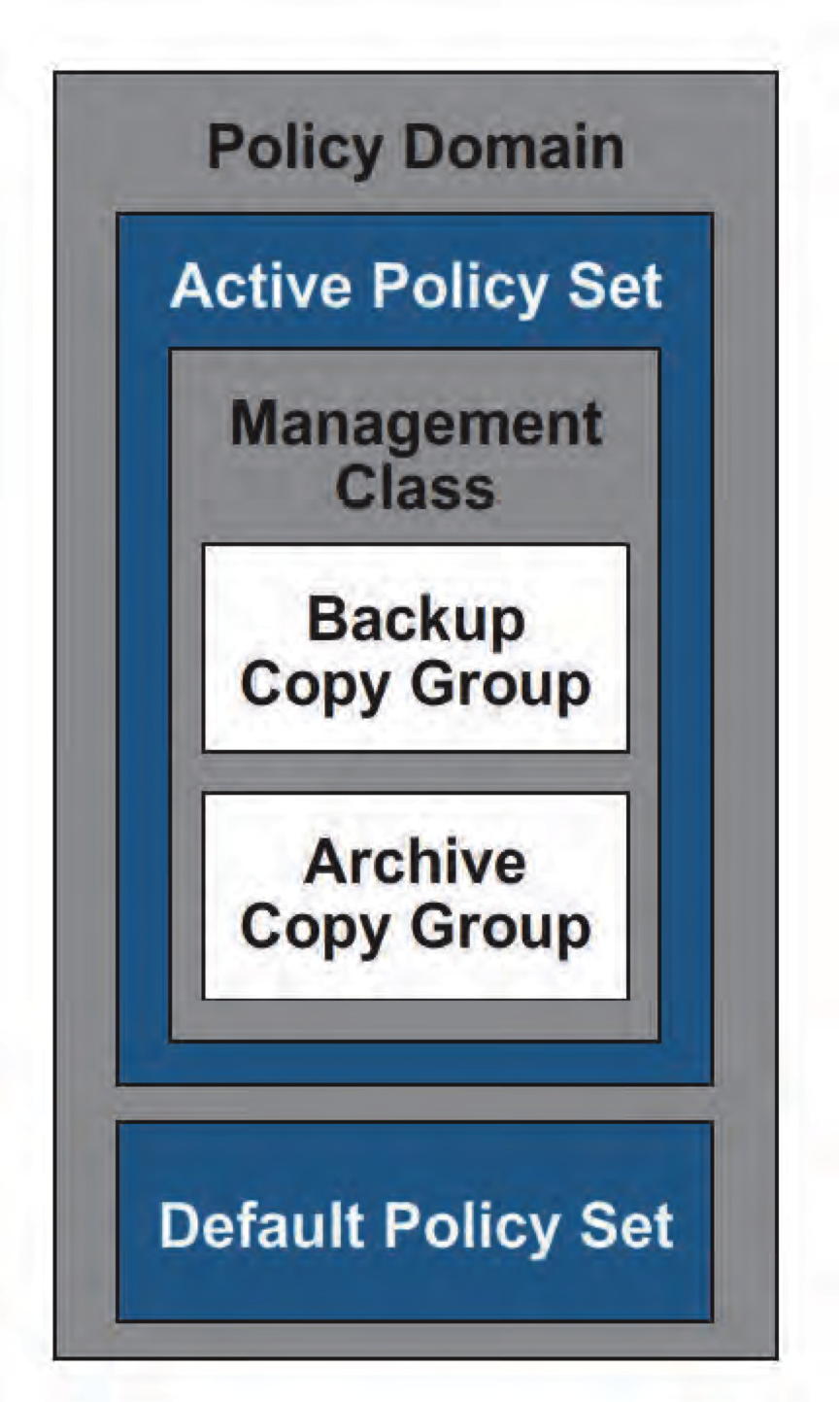

Each policy set contains a default management class and can contain any number of additional management classes. Use policy sets to implement different policies, based on user and business requirements.

You must assign a default management class for a policy set before you can activate that policy set. Use the assign defmgmtclass command to specify an existing management class as the default management class for a particular policy set. Ensure that the default management class contains both an archive copy group and a backup copy group.

ASsign DEFMGmtclass domainname setname classname

Validating and Activating Policy Sets

The validate policyset command examines the management class and copy group definitions in a specified policy set. It reports on conditions that need consideration if the policy set is activated. After a change is made to a policy set and the policy set is validated, you must activate the policy set to make it the ACTIVE policy set. The validate policyset command fails if any of the following conditions exist:

- A default management class is not defined for the policy set.

- A copy group within the policy set specifies a copy storage pool as a destination.

- A management class specifies a copy pool as the destination for space-managed files.
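The three failure conditions above can be pictured as a pre-flight check. The Python sketch below runs against a hypothetical dictionary layout for a policy set; the keys `default_class`, `classes`, `copy_groups`, and `migration_destination` are illustrative assumptions and do not correspond to any server API.

```python
from typing import Dict, List


def validation_failures(policy_set: Dict, copy_pools: List[str]) -> List[str]:
    """Return the reasons validation would fail (an empty list means none)."""
    failures = []
    # Condition 1: a default management class must be assigned.
    if not policy_set.get("default_class"):
        failures.append("no default management class")
    for name, mgmt_class in policy_set.get("classes", {}).items():
        # Condition 2: no copy group may use a copy storage pool.
        for cg_type, destination in mgmt_class.get("copy_groups", {}).items():
            if destination in copy_pools:
                failures.append(
                    f"{name}: {cg_type} copy group points at copy pool {destination}")
        # Condition 3: space-managed files may not target a copy pool.
        if mgmt_class.get("migration_destination") in copy_pools:
            failures.append(f"{name}: space-managed files point at a copy pool")
    return failures
```

A policy set with a default class whose copy groups point at BACKUPPOOL and ARCHIVEPOOL yields an empty list, while a set with no default class and a backup copy group aimed at a copy pool yields one entry per problem.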

When a policy set is activated, its contents are copied to a policy set that has the reserved name ACTIVE. After activation, the original policy set and the ACTIVE policy set have no relationship. You can still modify the original policy set, but the copied definitions in the ACTIVE policy set can be changed only by activating another policy set.
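The copy semantics of activation resemble a deep copy, which a few lines of Python can illustrate. The domain layout, the set name PRODSET, and the `activate` helper are all hypothetical; the point is only that edits to the original set do not reach ACTIVE until the set is activated again.

```python
import copy


def activate(domain: dict, set_name: str) -> None:
    """Copy the named policy set into the reserved ACTIVE slot."""
    domain["ACTIVE"] = copy.deepcopy(domain[set_name])


# Hypothetical domain with one policy set holding a single setting.
domain = {"PRODSET": {"default_class": "MC1", "verexists": 2}}

activate(domain, "PRODSET")
domain["PRODSET"]["verexists"] = 5          # edit the original afterwards
unchanged = domain["ACTIVE"]["verexists"]   # ACTIVE still holds 2

activate(domain, "PRODSET")                 # re-activate to pick up the edit
updated = domain["ACTIVE"]["verexists"]     # ACTIVE now holds 5
```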

Use the validate policyset command to verify that a policy set is complete and valid before you activate it:

VALidate POlicyset domainname policysetname

Then, use the activate policyset command to specify a policy set as the active policy set for a policy domain:

ACTivate POlicyset domainname policysetname
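To tie the steps together, the Python sketch below assembles the administrative commands in the order this article describes: define the two copy groups, assign the default management class, validate, then activate. It only builds command strings; the helper names and the sample names PRODDOM, PRODSET, and PRODCLASS are hypothetical, and the parameter values are the defaults quoted earlier.

```python
from typing import List


def copygroup_command(domain: str, policy_set: str, mgmt_class: str,
                      cg_type: str, destination: str, **options) -> str:
    """Build a DEFINE COPYGROUP command string from keyword options."""
    parts = ["DEFINE COPYGROUP", domain, policy_set, mgmt_class, "STANDARD",
             f"TYPE={cg_type}", f"DESTINATION={destination}"]
    # Keyword arguments keep their order, so options appear as written.
    parts += [f"{key.upper()}={value}" for key, value in options.items()]
    return " ".join(parts)


def policy_commands(domain: str, policy_set: str, mgmt_class: str) -> List[str]:
    """Return the commands for one policy set, in the order they are run."""
    return [
        copygroup_command(domain, policy_set, mgmt_class, "BACKUP", "BACKUPPOOL",
                          verexists=2, verdeleted=1, retextra=30, retonly=60),
        copygroup_command(domain, policy_set, mgmt_class, "ARCHIVE", "ARCHIVEPOOL",
                          retver=365),
        f"ASSIGN DEFMGMTCLASS {domain} {policy_set} {mgmt_class}",
        f"VALIDATE POLICYSET {domain} {policy_set}",
        f"ACTIVATE POLICYSET {domain} {policy_set}",
    ]


for command in policy_commands("PRODDOM", "PRODSET", "PRODCLASS"):
    print(command)
```

Each printed line could then be entered in an administrative command-line session. Building the strings separately from running them makes it easy to review the sequence before anything changes on the server.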
