The next-generation IT platform is built on social networking, mobile computing, cloud services, and big data analytics technologies. Applications that support these technologies require significantly higher performance, scalability, and availability than traditional applications. Storage technology solutions that can meet these requirements are:

- Advanced Storage Area Network (SAN)

- Different types of SAN implementations, such as Fibre Channel (FC) SAN, Internet Protocol (IP) SAN, and Fibre Channel over Ethernet (FCoE) SAN

- Virtualization in SAN

- Software-defined networking

We will look into all these SAN connectivity protocols in the next three chapters. First, let us understand what a SAN is.

What is a Storage Area Network (SAN)?

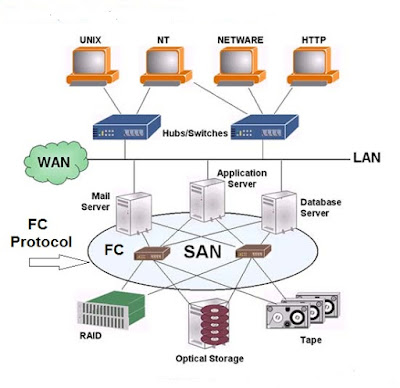

According to EMC's definition, a storage area network (SAN) is a network that primarily connects storage systems with compute systems and also connects storage systems with each other. It enables multiple compute systems to access and share storage resources. It also enables data transfer between storage systems. With a long-distance SAN, data transfer can be extended across geographic locations. A SAN usually provides access to block-based storage systems.

A SAN may span wide geographic areas. This enables organizations to connect geographically dispersed compute systems and storage systems. Long-distance SAN connectivity enables compute systems across locations to access shared data. It also enables the replication of data between storage systems that reside in separate locations. Replication over long distances helps protect data against local and regional disasters. Further, long-distance SAN connectivity facilitates remote backup of application data. Backup data can be transferred through a SAN to a backup device that may reside at a remote location.

Long-distance SAN connectivity is made possible by various kinds of SAN implementations, such as Fibre Channel SAN (FC SAN).

What is a Fibre Channel SAN (FC SAN)?

A Fibre Channel SAN (FC SAN) uses the Fibre Channel (FC) protocol for communication. The FC protocol (FCP) is used to transport data, commands, and status information between the compute systems and the storage systems. It is also used to transfer data between the storage systems.

FC is a high-speed network technology that runs on high-speed optical fibre cables and serial copper cables. The FC technology was developed to meet the demand for the increased speed of data transfer between compute systems and mass storage systems.

The latest FC implementation, 16 GFC, offers a throughput of 3200 MB/s (raw bit rate of 16 Gb/s), whereas Ultra640 SCSI offers a throughput of 640 MB/s. FC is expected to reach throughputs of 6400 MB/s (raw bit rate of 32 Gb/s) and 25600 MB/s (raw bit rate of 128 Gb/s) in 2016. Technical Committee T11, a committee within the International Committee for Information Technology Standards (INCITS), is responsible for FC interface standards.
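The relationship between the nominal speeds and the throughput figures quoted above can be checked with a little arithmetic. The sketch below is only an illustration: it assumes the quoted throughputs count both directions of the link, and that each nominal Gb/s corresponds to roughly 100 MB/s per direction. The function name `fc_throughput_mb_s` is made up for this example.

```python
# Throughput figure for Ultra640 SCSI, as quoted in the text.
ULTRA640_SCSI_MB_S = 640


def fc_throughput_mb_s(nominal_gb_s):
    """Return the combined (both directions) throughput in MB/s.

    Assumption for this sketch: about 100 MB/s per direction for each
    nominal Gb/s, with the quoted figure being the sum of both directions.
    """
    per_direction = nominal_gb_s * 100
    return 2 * per_direction


if __name__ == "__main__":
    for speed in (16, 32, 128):
        total = fc_throughput_mb_s(speed)
        ratio = total / ULTRA640_SCSI_MB_S
        print(f"{speed} GFC: {total} MB/s ({ratio:.0f}x Ultra640 SCSI)")
```

Under these assumptions, the 16, 32, and 128 Gb/s generations reproduce the 3200, 6400, and 25600 MB/s figures given in the text.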

The flow control mechanism in FC SAN delivers data as fast as the destination buffer is able to receive it, without dropping frames. FC also has very little transmission overhead. The FC architecture is highly scalable, and theoretically, a single FC SAN can accommodate approximately 15 million devices, a limit that follows from FC's 24-bit addressing.
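To see why this kind of flow control does not drop frames, consider a minimal sketch of credit-based flow control, the general idea behind FC's buffer-to-buffer credits. This is a toy model, not an implementation of the FC standard: the class names, the `simulate` function, and the one-frame-at-a-time receiver are all assumptions made for illustration. The sender starts with one credit per receive buffer, spends a credit for every frame it sends, and gets a credit back only when the receiver frees a buffer.

```python
from collections import deque


class Receiver:
    """Toy receiver with a fixed number of frame buffers."""

    def __init__(self, buffers):
        self.buffers = buffers
        self.queue = deque()

    def accept(self, frame):
        # In a credit-based scheme this branch should never be reached.
        if len(self.queue) >= self.buffers:
            raise OverflowError("frame dropped: no free buffer")
        self.queue.append(frame)

    def process_one(self):
        """Free one buffer; True means a credit goes back to the sender."""
        if self.queue:
            self.queue.popleft()
            return True
        return False


class Sender:
    """Toy sender that may only transmit while it holds credits."""

    def __init__(self, credits):
        self.credits = credits

    def try_send(self, frame, receiver):
        if self.credits == 0:
            return False  # must wait for a credit, so nothing is dropped
        self.credits -= 1
        receiver.accept(frame)
        return True

    def credit_returned(self):
        self.credits += 1


def simulate(frames, buffers):
    """Send `frames` frames; return (frames delivered, times sender waited)."""
    rx = Receiver(buffers)
    tx = Sender(credits=buffers)
    pending = deque(range(frames))
    sent = stalls = 0
    while pending:
        if tx.try_send(pending[0], rx):
            pending.popleft()
            sent += 1
        else:
            stalls += 1
            if rx.process_one():
                tx.credit_returned()
    return sent, stalls


if __name__ == "__main__":
    print(simulate(frames=10, buffers=4))
```

In this model the sender slows down to the receiver's pace instead of overrunning it: when credits run out it waits, and `accept` never raises because a frame is only sent when a buffer is known to be free.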

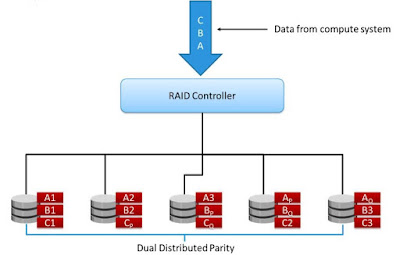

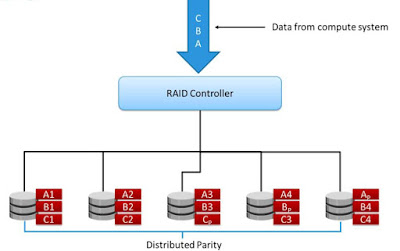
