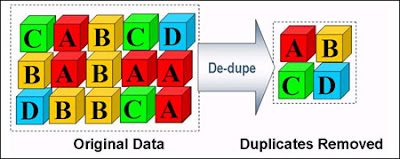

Deduplication is another storage capacity optimization technique. It identifies duplicate data and makes sure that the duplicate data is not stored again. Storage systems that implement deduplication achieve this by inspecting data and checking whether copies of it already exist in the system. If a copy already exists, then instead of storing additional copies, pointers are used to point to the existing copy of the data.

Multiple copies of the same data are replaced with a single copy plus a pointer for each additional copy. The pointers consume virtually no space, especially when compared to the data they are replacing. Deduplication is similar to but subtly different from compression. Deduplication identifies data patterns that have been seen before, but unlike compression, these patterns don't have to be close to each other in a file or data set. Deduplication works across files and directories and can even work across entire systems that are petabytes (PB) in size.

Also Read: What are Intelligent Storage Systems

Deduplication is widely deployed in backup and archive scenarios, but it is not widely implemented on HDD-based storage arrays. However, many all-flash storage arrays now implement data deduplication. Remember that data which has been deduplicated can still be compressed, but data which has been compressed cannot normally be deduplicated.

There are two types of deduplication techniques:

- File Level Deduplication

- Block Level Deduplication

File-level deduplication, sometimes referred to as single-instance storage, looks for exact duplicates at the file level, whereas block-level deduplication works at a far smaller granularity than whole files. Because it works at a much finer granularity than files, it can give far greater deduplication ratios and efficiencies.

File-level deduplication is a basic or initial form of deduplication. In order for two files to be considered duplicates, they must be exactly the same. This type of deduplication often does not give the desired results, because it can only deduplicate files whose contents are completely identical. Two files that differ by even a single byte are treated as unique and are both stored in full.

Deduplicating at the block level is a far better approach than deduplicating at the file level. Because block-level deduplication is more granular, it can still find duplicate data inside files that are only partly the same. The simplest form of block-level deduplication is fixed block, sometimes referred to as fixed-length segment. Fixed-block deduplication takes a predetermined block size such as 16 KB and breaks up a stream of data into segments of that block size. It then inspects these 16 KB segments and checks whether it already has copies of them.
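The difference between the two approaches can be sketched in a few lines of Python. This is only an illustration, not how any particular array implements it: the function names are hypothetical, SHA-256 is an assumed choice of hash, and the two "files" are made-up byte strings that differ only in their last block.

```python
import hashlib

BLOCK_SIZE = 16 * 1024  # 16 KB, the example block size used in the text


def split_fixed_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Break a byte stream into fixed-size segments (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def file_level_duplicate(a: bytes, b: bytes) -> bool:
    """File-level deduplication only matches files that are exactly the same."""
    return hashlib.sha256(a).digest() == hashlib.sha256(b).digest()


def shared_block_count(a: bytes, b: bytes) -> int:
    """Count the blocks of b whose content already appears among the blocks of a."""
    seen = {hashlib.sha256(block).digest() for block in split_fixed_blocks(a)}
    return sum(1 for block in split_fixed_blocks(b)
               if hashlib.sha256(block).digest() in seen)


# Two made-up 64 KB "files" that differ only in the very last byte.
file_a = b"A" * BLOCK_SIZE + b"B" * BLOCK_SIZE + b"C" * BLOCK_SIZE + b"D" * BLOCK_SIZE
file_b = file_a[:-1] + b"X"

print(file_level_duplicate(file_a, file_b))  # False: the files are not identical
print(shared_block_count(file_a, file_b))    # 3: three of the four blocks match
```

File-level deduplication would store both files in full here, while fixed-block deduplication would only need to store one extra 16 KB block for the second file.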

How Deduplication Works in SAN (Hashing and Fingerprinting)

Every block of data that is sent to and stored in a deduplicating system is given a digital fingerprint (a hash value that identifies its content). These fingerprints are indexed and stored so they can be looked up very quickly, and the index is often held in cache for fast access. If the index is lost, it can usually be re-created, although the process can take a long time and keep the system out of service while it runs.

Also Read: The next generation RAID techniques

When new writes come into a system that deduplicates data, these new writes are segmented, and each segment is then fingerprinted. The fingerprint is compared to the index of known fingerprints in the system. If a match is found, the system knows that the incoming data is a duplicate (or potential duplicate) block and replaces the incoming block with a much smaller pointer that points to the copy of the block already stored in the system.
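The write path described here can be sketched as a toy in-memory store. This is a minimal sketch under stated assumptions: the `DedupStore` class and its method names are hypothetical, SHA-256 stands in for whatever fingerprint function a real system uses, and the sketch trusts a fingerprint match outright, whereas a real system might treat a match only as a potential duplicate and verify it further.

```python
import hashlib


class DedupStore:
    """Toy in-memory block store that keeps one copy of each unique block."""

    def __init__(self, block_size: int = 16 * 1024):
        self.block_size = block_size
        self.index = {}    # fingerprint -> the single stored copy of that block
        self.volumes = {}  # name -> ordered list of fingerprints (the pointers)

    def write(self, name: str, data: bytes) -> int:
        """Store data under name and return how many new blocks were stored."""
        pointers = []
        new_blocks = 0
        for offset in range(0, len(data), self.block_size):
            block = data[offset:offset + self.block_size]      # segment the write
            fingerprint = hashlib.sha256(block).hexdigest()    # fingerprint it
            if fingerprint not in self.index:                  # look up the index
                self.index[fingerprint] = block                # new data: store it
                new_blocks += 1
            pointers.append(fingerprint)                       # always keep a pointer
        self.volumes[name] = pointers
        return new_blocks

    def read(self, name: str) -> bytes:
        """Rebuild the original data by following the pointers."""
        return b"".join(self.index[fp] for fp in self.volumes[name])


store = DedupStore(block_size=4)               # tiny blocks keep the demo readable
print(store.write("first", b"AAAABBBBCCCC"))   # 3: every block is new
print(store.write("second", b"AAAAXXXXCCCC"))  # 1: only "XXXX" is new
print(store.read("second"))                    # b'AAAAXXXXCCCC'
```

After both writes the store holds four unique blocks instead of six, and each write is still readable in full because its list of pointers is kept.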

Where to enable Deduplication in SAN

Deduplication can be implemented in various places. In backup scenarios it is generally done in one of three ways:

- Source Based Deduplication

- Target Based Deduplication

- Federated Deduplication

Source-Based Deduplication

Source-based deduplication is also known as client-side deduplication in the IBM Tivoli Storage Manager (TSM) backup tool, and it requires agent software to be installed on the host. Deduplication is done at the host, which is the source, and the process consumes host resources including CPU and RAM. However, source-based deduplication can significantly reduce the amount of network bandwidth consumed between the source and target. This is useful in backup scenarios where a large amount of data has to be backed up over the network.
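The bandwidth saving can be illustrated with a small sketch. Everything here is hypothetical: `BackupTarget`, `missing`, and `source_side_backup` are invented names, not the interface of any real backup product, and the sketch counts only block payload, ignoring the much smaller fingerprint traffic.

```python
import hashlib


def fingerprint(block: bytes) -> str:
    return hashlib.sha256(block).hexdigest()


class BackupTarget:
    """Hypothetical backup target that stores blocks keyed by fingerprint."""

    def __init__(self):
        self.blocks = {}

    def missing(self, fingerprints):
        """Return the fingerprints the target does not hold yet."""
        return {fp for fp in fingerprints if fp not in self.blocks}

    def store(self, blocks):
        for block in blocks:
            self.blocks[fingerprint(block)] = block


def source_side_backup(data: bytes, target: BackupTarget, block_size: int = 4) -> int:
    """Deduplicate on the host and return the payload bytes sent to the target."""
    blocks = [data[i:i + block_size] for i in range(0, len(data), block_size)]
    by_fp = {fingerprint(b): b for b in blocks}  # host CPU and RAM are spent here
    needed = target.missing(by_fp.keys())        # ask the target what it lacks
    to_send = [by_fp[fp] for fp in needed]
    target.store(to_send)                        # only new blocks cross the network
    return sum(len(b) for b in to_send)


target = BackupTarget()
print(source_side_backup(b"AAAABBBBCCCC", target))  # 12: first backup sends everything
print(source_side_backup(b"AAAABBBBDDDD", target))  # 4: only the changed block is sent
```

The second backup sends a third of the data, at the cost of hashing every block on the host.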

Target-Based Deduplication

Target-based deduplication, sometimes referred to as hardware-based deduplication or destination-side deduplication, is widely used in current backup environments. In target-based deduplication, the process of deduplication occurs on the target machine, such as a deduplicating backup appliance. These appliances tend to be purpose-built, with their own CPU, RAM, and persistent storage (disk). This approach relieves the host (source) of the burden of deduplicating, but it does nothing to reduce network bandwidth consumption between source and target. It is also common for deduplicating backup appliances to deduplicate or compress data being replicated between pairs of deduplicating backup appliances.

Federated Deduplication

Federated deduplication is a distributed approach to the process of deduplicating data. It is effectively a combination of source-based and target-based deduplication, giving you the best of both techniques: good deduplication ratios as well as reduced network bandwidth between source and target. This federated approach, whereby the source and target both perform deduplication, can improve performance by reducing network bandwidth between the source and target, as well as potentially improving the throughput capability of the target deduplication appliance. The latter is achieved by the data effectively being pre-deduplicated at the source before it reaches the target. It is now common for many backup solutions to offer fully federated approaches to deduplication, where deduplication occurs at both the source and target.

Using Deduplication Techniques in Virtualization Environment

Deduplication works well with virtualization technologies such as server and desktop virtualization. These environments tend to deduplicate well because a large number of virtual Windows servers will share a very high percentage of the same core files and blocks (VM templates). The same goes for other operating systems such as Linux.

Also Read: Importance of Cache technique in Block Based Storage Systems

For example, if you have a 100 GB image of a Windows server and deploy 1000 servers from that image, those 1000 servers will share a massive number of identical files and blocks. These will deduplicate very well. Deduplication like this can also lead to performance improvements if you have a large read cache, because many of these shared files and blocks will be read very frequently by all virtual machines, causing them to remain in read cache where they can be accessed extremely quickly.

Using Deduplication Techniques in Cloud

Using deduplication in tiered storage and cloud environments is also a good choice, because accessing data from lower-tier storage, and especially from the cloud, is already accepted to be slow. The added overhead of deduplication therefore makes little noticeable difference to performance, but it can significantly reduce capacity utilization.

Factors affecting Deduplication in backups

Data deduplication performance (or ratio) is tied to the following factors:

- Retention period: This is the period of time that defines how long the backup copies are retained. The longer the retention, the greater the chance that identical data exists in the backup set, which increases the deduplication ratio and the storage space savings.

- Frequency of full backup: As more full backups are performed, more of the same data is backed up repeatedly, which results in a higher deduplication ratio.

- Change rate: This is the rate at which the data received from the backup application changes from backup to backup. Client data with few changes between backups produces higher deduplication ratios.

- Data type: Backups of user data such as text documents, PowerPoint presentations, spreadsheets, and e-mails are known to contain redundant data and are good deduplication candidates. Other data such as audio, video, and scanned images are highly unique and typically do not yield a good deduplication ratio.

- Deduplication method: The deduplication method also determines the effective deduplication ratio. Variable-length, sub-file deduplication discovers the highest amount of duplicate data.
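The effect of retention, full-backup frequency, and change rate can be illustrated with a deliberately simplified model. It is an assumption made for illustration, not a vendor formula: the first full backup is stored whole, and every later full backup adds only the changed fraction of the data as new blocks. The function name and the sample values are hypothetical.

```python
def estimated_dedup_ratio(full_backups: int, change_rate: float) -> float:
    """Estimate the logical-to-physical ratio for repeated full backups.

    Assumes the first full backup is stored whole and each later full backup
    adds only the changed fraction of the data set as new blocks.
    """
    if full_backups < 1:
        raise ValueError("full_backups must be at least 1")
    if not 0.0 <= change_rate <= 1.0:
        raise ValueError("change_rate must be between 0 and 1")
    logical = full_backups                           # in units of one full backup
    physical = 1 + (full_backups - 1) * change_rate  # first copy plus the changes
    return logical / physical


# More retained full backups raise the ratio; a higher change rate lowers it.
for retained, rate in [(4, 0.05), (12, 0.05), (12, 0.50)]:
    print(retained, rate, round(estimated_dedup_ratio(retained, rate), 2))
```

Under this model, keeping 12 full backups instead of 4 at a 5% change rate roughly doubles the ratio, while raising the change rate to 50% removes most of the benefit.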
