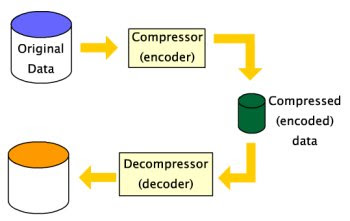

Compression is a storage capacity optimization technique that helps organizations store more data using less storage capacity. For example, a 500 MB file may compress down to 50 MB, so that the 500 MB file occupies only 50 MB of disk space. Compression also lets you transmit data over networks faster while consuming less bandwidth. All in all, it is a good capacity optimization technology and a sound choice for SAN infrastructures.
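The 500 MB to 50 MB example above works out to a 10:1 compression ratio, or 90% space savings. The following is a minimal sketch of that arithmetic using Python's standard `zlib` module; the helper name `space_savings` and the sample data are illustrative assumptions, and real-world ratios depend heavily on how repetitive the data is.

```python
import zlib


def space_savings(original_size: int, compressed_size: int) -> float:
    """Return the fraction of capacity saved (0.9 means 90% saved)."""
    return 1 - compressed_size / original_size


# The example from the text: 500 MB stored in 50 MB.
ratio = 500 / 50                  # 10.0, i.e. a 10:1 compression ratio
savings = space_savings(500, 50)  # roughly 0.9, i.e. 90% less capacity

# Measuring the same thing on made-up, highly repetitive sample data.
sample = b"customer_record;" * 4096
compressed = zlib.compress(sample)
measured_savings = space_savings(len(sample), len(compressed))
print(f"{len(sample)} bytes -> {len(compressed)} bytes "
      f"({measured_savings:.1%} saved)")
```

Data that is already compressed or encrypted usually shows little or no savings, so it is worth measuring on a representative sample.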

Compression is often file based, and many people are familiar with technologies such as WinZip, which allows you to compress and decompress individual files and folders and is especially useful for transferring large files over communications networks. Other types of compression include filesystem compression, storage array based compression, and backup compression.

- Filesystem compression works by compressing all files written to a filesystem.

- Storage array based compression works by compressing all data written to volumes in the storage array.

- Backup compression works by compressing data that is being backed up or written to tape.

There are two major types of compression techniques which can be deployed in SAN infrastructure.

- Lossless compression

- Lossy compression

As the names suggest, no information is lost with lossless compression, whereas some information is lost with lossy compression.
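The difference can be seen in a small sketch. The lossless half uses Python's standard `zlib` module; the lossy half is a deliberately simple, hypothetical quantization step (dropping the low bits of each byte), chosen only to show information being discarded. It is not how any particular image codec works.

```python
import zlib

samples = bytes([12, 13, 15, 200, 201, 203, 90, 91])

# Lossless: the round trip returns exactly the original bytes.
restored = zlib.decompress(zlib.compress(samples))


def quantize(data: bytes, bits_dropped: int = 4) -> bytes:
    """Toy lossy step: zero the low bits of every byte (illustrative only)."""
    mask = 0xFF & ~((1 << bits_dropped) - 1)
    return bytes(b & mask for b in data)


# Lossy: nearby values collapse together and cannot be recovered.
approx = quantize(samples)

print(list(restored))  # identical to the input
print(list(approx))    # close to the input, but detail is gone
```

The quantized data has fewer distinct values and therefore compresses better afterwards, but the original bytes cannot be reconstructed from it.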

One popular form of lossy compression is JPEG image compression. Lossy compression technologies like this work by removing detail that has little effect on the perceived quality of the data. The human eye often cannot tell the difference between a compressed and an uncompressed image file, even though the compressed image has lost a lot of detail. Now, let's see how compression generally works in SAN infrastructure.

How Compression Works in SAN Infrastructure

A simple example is a graphic that has a large, blue square in the corner. In the file, this area of blue could be defined as follows:

Vector 0,0 = blue

Vector 0,1 = blue

Vector 0,2 = blue

Vector 0,3 = blue

...

...

Vector 100,99 = blue

A simple compression algorithm could take this repeating pattern and replace it with something like the following:

vectors 0,0 through 100,99 = blue


The algorithm identified a pattern and re-encoded it as something shorter that tells us exactly the same thing. We haven’t lost anything in our compression, but the file takes up fewer bits and bytes. This is just an example; real-world compression algorithms are far more advanced than this.
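The idea in this example is essentially run-length encoding. Here is a minimal sketch, assuming the image is flattened into a list of color names; the function names `rle_encode` and `rle_decode` and the trailing white pixels are illustrative and not taken from any storage product.

```python
from itertools import groupby


def rle_encode(pixels):
    """Collapse each run of identical values into a (value, run_length) pair."""
    return [(value, len(list(run))) for value, run in groupby(pixels)]


def rle_decode(runs):
    """Expand (value, run_length) pairs back into the original sequence."""
    out = []
    for value, count in runs:
        out.extend([value] * count)
    return out


# Vectors 0,0 through 100,99 are 101 * 100 = 10,100 blue entries,
# followed here by a few pixels of another color.
pixels = ["blue"] * 10_100 + ["white"] * 5

encoded = rle_encode(pixels)
print(encoded)  # [('blue', 10100), ('white', 5)]
```

Decoding the two pairs reproduces all 10,105 original entries, which is what makes the scheme lossless.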


However, many organizations are not comfortable deploying compression in their storage systems because of its unwanted side effect of reducing storage performance if it is not properly configured. Reduced storage performance can be seen on both HDDs and SSDs.

Where to enable Compression in SAN

There are two common compression approaches that can be deployed in a storage array, for primary storage as well as for backup and archive.

- Inline Compression

- Post-process Compression

Inline compression compresses data while it is in cache, before it is sent to disk. This can consume CPU and cache resources, and under high I/O load, compressing and decompressing can increase I/O latency. However, inline compression reduces the amount of data being written to the backend disks, which means less capacity is required on the storage drives.

The amount of bandwidth required on the internal bus of a storage array that performs inline compression can also be significantly less than on a system that does not perform inline compression. This is because data is compressed in cache before being sent to the drives on the backend over the internal bus.
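The write path described above can be sketched as a toy model. The class `InlineCompressingArray` and its counters are hypothetical, and `zlib` at its fastest level merely stands in for whatever algorithm a real array would use.

```python
import zlib


class InlineCompressingArray:
    """Toy model: every write is compressed in cache before reaching the backend."""

    def __init__(self):
        self.backend = {}                  # block address -> compressed bytes
        self.bytes_received = 0            # what hosts sent to the array
        self.bytes_written_to_backend = 0  # what crossed the internal bus

    def write(self, address: int, data: bytes) -> None:
        self.bytes_received += len(data)
        compressed = zlib.compress(data, 1)  # level 1: fastest, lowest CPU cost
        self.backend[address] = compressed
        self.bytes_written_to_backend += len(compressed)

    def read(self, address: int) -> bytes:
        # Decompression always happens inline on the read path.
        return zlib.decompress(self.backend[address])


array = InlineCompressingArray()
block = b"A" * 4096
for lba in range(8):
    array.write(lba, block)

print(array.bytes_received, array.bytes_written_to_backend)
```

The CPU time spent in `zlib.compress` on every write is the latency cost the text mentions; the smaller backend byte count is the capacity and bus bandwidth benefit.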

Post-process Compression

In post-process compression, data has to be written to disk in its uncompressed form, and then compressed at a later time. This avoids any potential negative performance impact of the compression operation, but it also avoids any potential positive performance impact. In addition, the major drawback to post-process compression is that it demands that you have enough storage capacity to land the data in its uncompressed form, where it remains until it is compressed at a later time.
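The capacity drawback can be illustrated with a toy model in which writes land uncompressed and a later pass compresses them. The class `PostProcessArray` and its methods are hypothetical names used only for this sketch, with `zlib` standing in for the array's compression algorithm.

```python
import zlib


class PostProcessArray:
    """Toy model: data lands uncompressed and is compressed by a later pass."""

    def __init__(self):
        self.blocks = {}             # address -> (is_compressed, payload)
        self.peak_capacity_used = 0  # highest capacity needed at any point

    def capacity_used(self) -> int:
        return sum(len(payload) for _, payload in self.blocks.values())

    def write(self, address: int, data: bytes) -> None:
        # No compression on the write path, so no added write latency.
        self.blocks[address] = (False, data)
        self.peak_capacity_used = max(self.peak_capacity_used,
                                      self.capacity_used())

    def compress_pass(self) -> None:
        # Background job, for example scheduled outside peak hours.
        for address, (is_compressed, payload) in list(self.blocks.items()):
            if not is_compressed:
                self.blocks[address] = (True, zlib.compress(payload))

    def read(self, address: int) -> bytes:
        is_compressed, payload = self.blocks[address]
        return zlib.decompress(payload) if is_compressed else payload


array = PostProcessArray()
block = b"A" * 4096
for lba in range(8):
    array.write(lba, block)

used_before = array.capacity_used()
array.compress_pass()
used_after = array.capacity_used()
print(used_before, used_after, array.peak_capacity_used)
```

Even though the data ends up small, `peak_capacity_used` stays at the full uncompressed size, which is the capacity the array must be able to provide.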

Nevertheless, whether compression is done inline or post-process, decompression always has to be done inline, in real time.

Performance Implications of using Compression in SAN Environment

Performance is important in primary storage systems. A major performance consideration relating to compression and primary storage is the time it takes to decompress data that has already been compressed. For example, when a host issues a read request for some data, if that data is already in cache, the data is served from cache in what is known as a cache hit. Cache hits are extremely fast. However, if the data being read isn’t already in cache, it has to be read from disk. This is known as a cache miss. Cache misses are slow, especially on HDDs.

Now let’s mix decompression into the above scenario. Every time a cache miss occurs, not only do we have to request the data from disk on the backend, we also have to decompress the data. Depending on the compression algorithm deployed, decompressing data can add a significant delay to the process. Because of this, many storage technologies make a trade-off between capacity and performance and use compression technologies based on Lempel-Ziv (LZ). LZ compression doesn’t give the best compression ratio, but it has relatively low overhead and can decompress data fast.
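The read path just described can be sketched as follows. This is a simplified model, assuming the cache holds uncompressed data and the backend holds compressed data; the class `ReadPath` and its counters are hypothetical, and `zlib` (whose DEFLATE format builds on LZ77) stands in for the array's algorithm.

```python
import zlib


class ReadPath:
    """Toy read path: cache hits are served directly, misses pay for decompression."""

    def __init__(self, backend: dict):
        self.backend = backend   # address -> compressed bytes (the "disk")
        self.cache = {}          # address -> uncompressed bytes (assumption)
        self.hits = 0
        self.misses = 0
        self.decompressions = 0

    def read(self, address: int) -> bytes:
        if address in self.cache:
            self.hits += 1       # cache hit: no disk access, no decompression
            return self.cache[address]
        self.misses += 1         # cache miss: fetch from the backend...
        data = zlib.decompress(self.backend[address])
        self.decompressions += 1  # ...and decompress before returning
        self.cache[address] = data
        return data


backend = {
    0: zlib.compress(b"payload-0" * 100),
    1: zlib.compress(b"payload-1" * 100),
}
path = ReadPath(backend)
first = path.read(0)   # miss: read from backend and decompress
second = path.read(0)  # hit: served straight from cache
print(path.hits, path.misses, path.decompressions)  # 1 1 1
```

Only the miss incurs decompression in this model, which is why the speed of the decompression algorithm matters most for workloads with a low cache hit rate.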

Modern CPUs now ship with native compression features and enough free cycles to deal with tasks such as compression. Also, solid-state technologies such as flash memory are driving adoption of compression. After all, compression allows more data to be stored on flash memory, and flash memory helps avoid some of the performance impact imposed by compression technologies.

Although new compression techniques are evolving to address storage demands, it is recommended that you test them before deploying into production. Compression changes the way things work, and you need to be aware of the impact of those changes before deploying.
